Spark offers a large set of high-level operators that make it easy to build parallel applications, and you can use it interactively from the Scala, Python, R, and SQL shells. Spark powers a stack of libraries, including Spark SQL and DataFrames, MLlib for machine learning, GraphX, and Spark Streaming, and you can combine these libraries seamlessly in the same application. Spark facilitates the implementation of both iterative algorithms, which visit their data set multiple times in a loop, and interactive/exploratory data analysis, i.e., repeated database-style querying of data. The latency of such applications may be reduced by several orders of magnitude compared with an Apache Hadoop MapReduce implementation.
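
The latency advantage for iterative algorithms comes largely from keeping the working data in memory instead of re-reading it on every pass. The following is a toy model under that assumption, not Spark code: `make_loader`, `iterate_without_cache`, and `iterate_with_cache` are hypothetical names, and the "storage read" is simulated by a counter.

```python
# Toy model, not Spark code: compares re-reading input on every pass
# (MapReduce-style) with reading it once and reusing it from memory.


def make_loader(dataset):
    """Return a hypothetical 'read from storage' function and its read counter."""
    stats = {"reads": 0}

    def load():
        stats["reads"] += 1  # count every simulated trip to storage
        return list(dataset)

    return load, stats


def iterate_without_cache(load, iterations):
    """Each iteration re-reads the input, as a chain of MapReduce jobs would."""
    total = 0.0
    for _ in range(iterations):
        total += sum(load())
    return total


def iterate_with_cache(load, iterations):
    """Read once, keep the data in memory, and reuse it on every iteration."""
    data = load()
    total = 0.0
    for _ in range(iterations):
        total += sum(data)
    return total


if __name__ == "__main__":
    load_a, stats_a = make_loader([1.0, 2.0, 3.0, 4.0])
    load_b, stats_b = make_loader([1.0, 2.0, 3.0, 4.0])
    print(iterate_without_cache(load_a, 10), stats_a["reads"])  # 100.0 10
    print(iterate_with_cache(load_b, 10), stats_b["reads"])     # 100.0 1
```

Both loops compute the same result; only the number of reads differs. In PySpark, the analogous step is calling `cache()` (or `persist()`) on an RDD or DataFrame that an iterative loop reuses.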

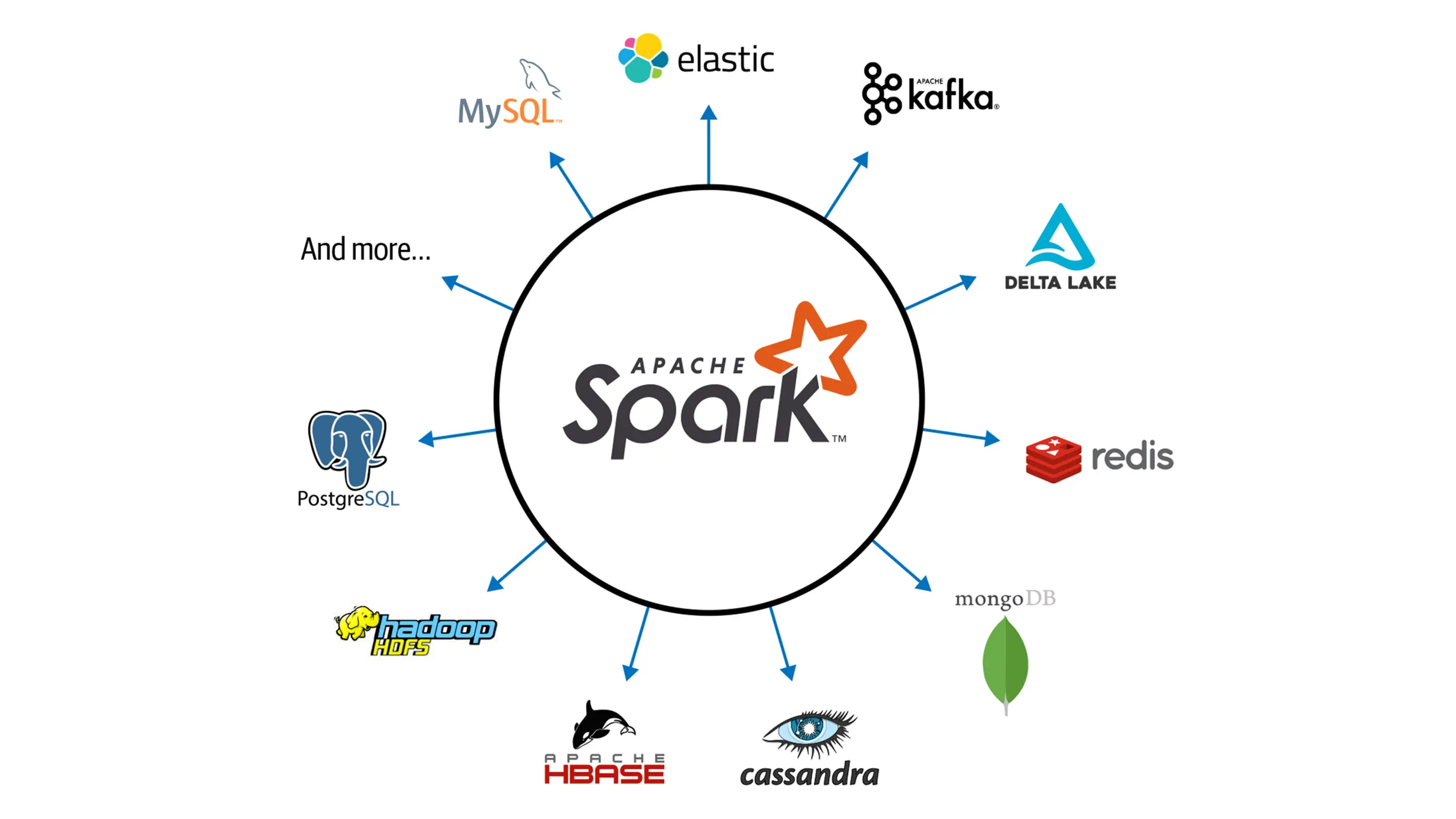

Among the class of iterative algorithms are the training algorithms for machine learning systems, which formed the initial impetus for developing Apache Spark. Apache Spark requires a cluster manager and a distributed storage system. For cluster management, Spark supports standalone mode (a native Spark cluster, which you can launch either manually or with the launch scripts provided with the Spark distribution; these daemons can also run on a single machine for testing), Hadoop YARN, Apache Mesos, or Kubernetes. For distributed storage, Spark can interface with a wide variety of systems, including the Hadoop Distributed File System (HDFS), the MapR File System (MapR-FS), Cassandra, OpenStack Swift, Amazon S3, Kudu, and the Lustre file system, or a custom solution can be implemented. Spark also supports a pseudo-distributed local mode, usually used only for development or testing, where distributed storage is not required and the local file system can be used instead; in that case, Spark runs on a single machine with one worker thread per CPU core.
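
Local mode is selected with a master URL such as `local[*]` (one worker thread per logical core) or `local[N]` (N threads). The following plain-Python analogy shows the idea: partition the data, run one task per core, then combine the partial results. `split_into_partitions` and `local_sum_of_squares` are hypothetical helpers, not Spark APIs. Because of Python's global interpreter lock, these threads illustrate the structure rather than a real CPU speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(items, num_partitions):
    """Split a list into num_partitions contiguous, roughly equal slices."""
    size, remainder = divmod(len(items), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` partitions get one extra element each.
        end = start + size + (1 if i < remainder else 0)
        partitions.append(items[start:end])
        start = end
    return partitions


def local_sum_of_squares(items, num_workers=None):
    """Sum x*x over items, processing each partition on its own worker thread.

    num_workers defaults to the number of logical cores, loosely mirroring
    the `local[*]` master setting.
    """
    workers = num_workers or os.cpu_count() or 1
    partitions = split_into_partitions(items, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # One task per partition; the partial sums are combined afterwards.
        partial_sums = pool.map(lambda part: sum(x * x for x in part), partitions)
        return sum(partial_sums)
```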

Spark Core is the foundation of the overall project. It provides distributed task dispatching, scheduling, and basic I/O functionality, exposed through an application programming interface (for Java, Python, Scala, .NET, and R) centered on the RDD (resilient distributed dataset) abstraction. The Java API is available for other JVM languages, and is also usable from some non-JVM languages that can connect to the JVM, such as Julia. This interface mirrors a functional, higher-order model of programming: a "driver" program invokes parallel operations such as map, filter, or reduce on an RDD by passing a function to Spark, which then schedules the function's execution in parallel on the cluster. These operations, and additional ones such as joins, take RDDs as input and produce new RDDs.
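
The driver-side model of passing functions to map, filter, and reduce can be sketched with a single-process stand-in. `ToyRDD` is a hypothetical class, not Spark's RDD: it records transformations as a lineage without running them, leaves the original object unchanged, and only executes when an action (`collect` or `reduce`) is called. Real Spark distributes this work across partitions and recomputes only lost partitions; the toy replays everything locally.

```python
from functools import reduce as functools_reduce


class ToyRDD:
    """A hypothetical, single-process stand-in for an RDD.

    Transformations (map, filter) are recorded lazily as a lineage of
    functions; nothing runs until an action (collect, reduce) is called.
    """

    def __init__(self, source, lineage=()):
        self._source = source            # original input data, never mutated
        self._lineage = tuple(lineage)   # sequence of (name, function) steps

    def map(self, fn):
        # Return a NEW ToyRDD; this one is left unchanged (immutability).
        return ToyRDD(self._source, self._lineage + (("map", fn),))

    def filter(self, pred):
        return ToyRDD(self._source, self._lineage + (("filter", pred),))

    def lineage(self):
        """Names of the transformations that produced this dataset."""
        return [name for name, _ in self._lineage]

    def collect(self):
        # Action: replay the recorded lineage over the source data.
        data = list(self._source)
        for name, fn in self._lineage:
            if name == "map":
                data = [fn(x) for x in data]
            else:
                data = [x for x in data if fn(x)]
        return data

    def reduce(self, fn):
        # Action: combine all elements pairwise with fn.
        return functools_reduce(fn, self.collect())
```

For example, `ToyRDD([1, 2, 3, 4, 5]).map(lambda x: x * x).filter(lambda x: x % 2 == 0)` does no work until `.collect()` returns `[4, 16]` or `.reduce(lambda a, b: a + b)` returns `20`. Keeping the lineage is what lets a result be rebuilt from the source data at any time.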

RDDs are immutable and their operations are lazy; fault tolerance is achieved by keeping track of the "lineage" of each RDD (the sequence of operations that produced it), so that it can be reconstructed in the case of data loss. RDDs can contain any type of Python, .NET, Java, or Scala objects. Besides the RDD-oriented functional style of programming, Spark provides two restricted forms of shared variables: broadcast variables reference read-only data that needs to be available on all nodes, while accumulators can be used to program reductions in an imperative style. A typical example of RDD-centric functional programming is a word-count program that computes the frequencies of all words occurring in a set of text files and prints the most common ones. In such a program, each map, flatMap (a variant of map), and reduceByKey call takes an anonymous function that performs a simple operation on a single data item (or a pair of items), and applies its argument to transform an RDD into a new RDD.
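
The word-count pipeline described above can be sketched in plain Python so that each stage is visible. `flat_map`, `reduce_by_key`, and `word_count` are hypothetical local helpers that mimic the semantics of `flatMap`, `map`, and `reduceByKey` on an in-memory list; they are not Spark functions. A rough PySpark equivalent is shown in the comments, assuming an existing `SparkContext` named `sc`.

```python
# A rough PySpark equivalent (assumes an existing SparkContext `sc`):
#   counts = (sc.textFile(path)
#               .flatMap(lambda line: line.split())
#               .map(lambda word: (word, 1))
#               .reduceByKey(lambda a, b: a + b))
#   counts.takeOrdered(n, key=lambda kv: -kv[1])


def flat_map(fn, items):
    """Apply fn to each item and concatenate the resulting iterables."""
    return [out for item in items for out in fn(item)]


def reduce_by_key(fn, pairs):
    """Merge the values for each key with fn (a simplified reduceByKey)."""
    merged = {}
    for key, value in pairs:
        merged[key] = fn(merged[key], value) if key in merged else value
    return merged


def word_count(lines, top_n):
    """Return the top_n (word, count) pairs, most frequent first."""
    words = flat_map(lambda line: line.split(), lines)  # like flatMap
    pairs = [(word, 1) for word in words]                # like map
    counts = reduce_by_key(lambda a, b: a + b, pairs)    # like reduceByKey
    # Sort by descending count; break ties alphabetically for stable output.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]
```

For instance, `word_count(["to be or not to be", "to see or not"], 3)` returns `[("to", 3), ("be", 2), ("not", 2)]`. In Spark, the reduceByKey step additionally merges values within each partition before shuffling, which the local dictionary here does not model.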